BILL: Federal Artificial Intelligence Risk Management Act of 2023 (S.3205)

Tell your reps to support or oppose this bill

The Bill

S.3205 - Federal Artificial Intelligence Risk Management Act of 2023

Bill Details

- Sponsored by Jerry Moran (R-Kan.) on Nov. 2, 2023

- Committee: Senate - Homeland Security and Governmental Affairs

- House: Not yet voted

- Senate: Not yet voted

- President: Not yet passed

Bill Overview

- This bill directs federal agencies to use the Artificial Intelligence Risk Management Framework developed by the National Institute of Standards and Technology (NIST). If passed, it would require NIST to issue guidelines, including standards and best practices, for agencies to incorporate AI into their workflows while managing risks.

- The guidelines would also specify appropriate cybersecurity strategies and tools to improve the security of AI systems.

- The Government Accountability Office will be directed to study the impact of the framework's application on the use of AI in government agencies.

What Supporters Are Saying

- Chandler C. Morse, vice president of public policy at Workday, a financial and human resources software company, said:

"As a long-standing champion and early adopter of the NIST AI Risk Management Framework, Workday welcomes today's introduction of the Federal AI Risk Management Framework Act. This bipartisan proposal would advance responsible AI by directing both federal agencies and companies selling AI in the federal marketplace to adopt the NIST Framework."

- Fred Humphries, corporate vice president of government affairs at Microsoft, said:

"Implementing a widely recognized risk management framework by the U.S. Government can harness the power of AI and advance this technology safely."

- On AI regulations, Sen. Richard Blumenthal (D-Conn.) said:

"Congress failed to meet the moment on social media. Now we have the obligation to do it on AI before the threats and the risks become real."

What Opponents Are Saying

- Some oppose government regulations more generally. For example, former Google executive chairman Eric Schmidt proposed a "leave us alone" solution. He added:

"There's no one in government who can get it [AI oversight] right...I would much rather have the current companies define reasonable boundaries."

- While OpenAI CEO Sam Altman has endorsed the idea of federal oversight of AI, he has pushed for an overarching agency to regulate the technology rather than individual bills. Altman has also been outspoken against other AI regulations, such as the European Union's proposed AI Act. Responding to the EU's bill, he said:

"We will try to comply, but if we can't comply, we will cease operating [in Europe]."

Tell your reps to support or oppose this bill.

-Jamie Epstein & Emma Kansas

The Latest

-

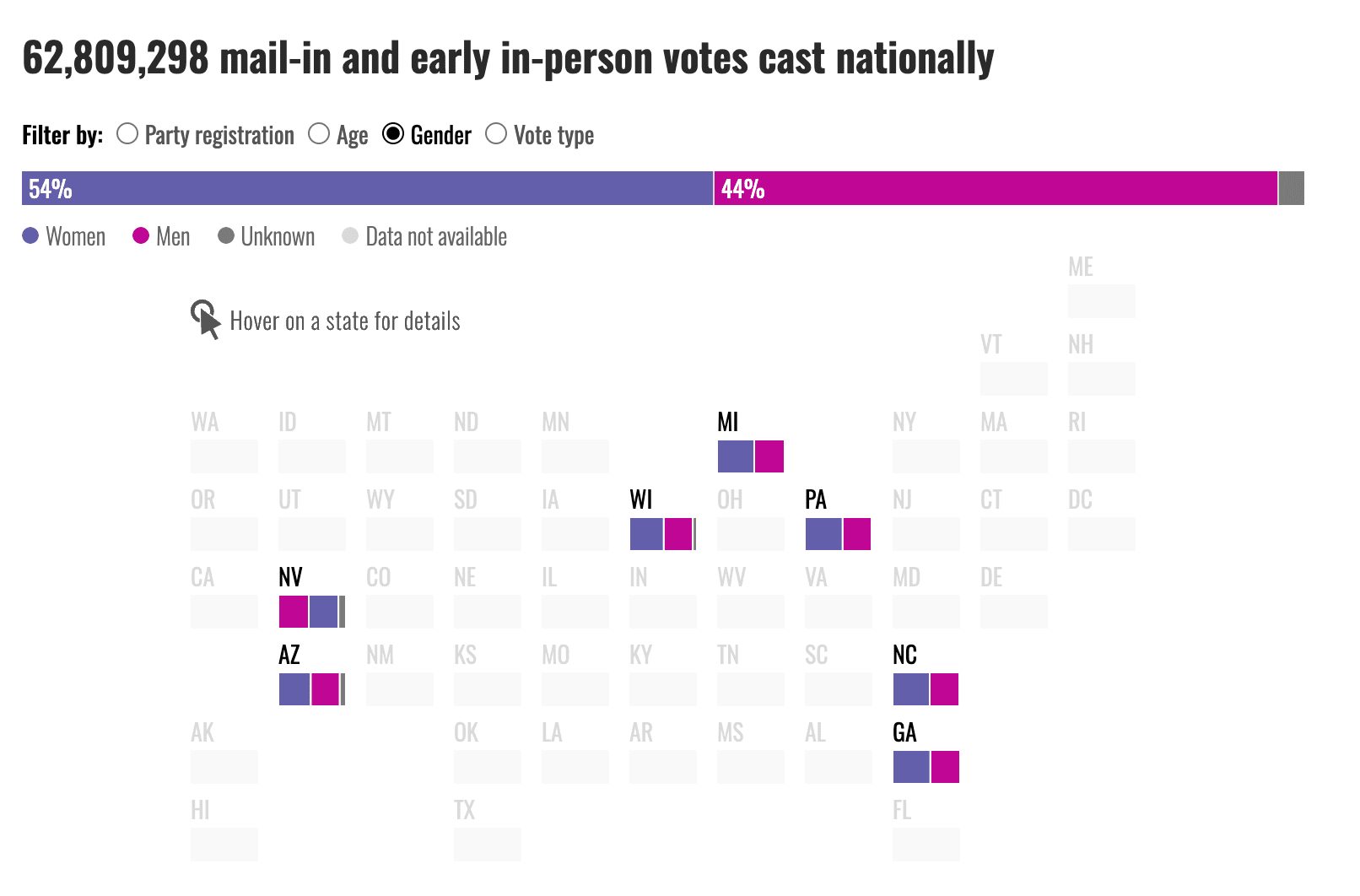

Women Are Shaping This Election — Why Is the Media Missing It?As we reflect on the media coverage of this election season, it’s clear that mainstream outlets have zeroed in on the usual read more... Elections

Women Are Shaping This Election — Why Is the Media Missing It?As we reflect on the media coverage of this election season, it’s clear that mainstream outlets have zeroed in on the usual read more... Elections -

Your Share of the National Debt is ... $105,000The big picture: The U.S. federal deficit for fiscal year 2024 hit a staggering $1.8 trillion, according to the Congressional read more... Deficits & Debt

Your Share of the National Debt is ... $105,000The big picture: The U.S. federal deficit for fiscal year 2024 hit a staggering $1.8 trillion, according to the Congressional read more... Deficits & Debt -

Election News: Second Trump Assassination Attempt, and Poll UpdatesElection Day is 6 weeks away. Here's what's going on in the polls and the presidential candidates' campaigns. September 24 , read more... Congress Shenanigans

Election News: Second Trump Assassination Attempt, and Poll UpdatesElection Day is 6 weeks away. Here's what's going on in the polls and the presidential candidates' campaigns. September 24 , read more... Congress Shenanigans -

More Women Face Pregnancy-Related Charges After Roe’s Fall, Report FindsWhat’s the story? A report released by Pregnancy Justice, a women's health advocacy group, found that women have been read more... Advocacy

More Women Face Pregnancy-Related Charges After Roe’s Fall, Report FindsWhat’s the story? A report released by Pregnancy Justice, a women's health advocacy group, found that women have been read more... Advocacy

Climate & Consumption

Climate & Consumption

Health & Hunger

Health & Hunger

Politics & Policy

Politics & Policy

Safety & Security

Safety & Security

The pros outweigh the cons, as the act provides a critical foundation for safe and ethical AI use in federal operations. However, its success depends on implementation efforts and addressing gaps related to civil rights protections and flexibility.

Recommendations for Improvement:

• Support the act but advocate for:

1. Additional funding and resources for agencies to effectively implement the framework.

2. Stronger oversight mechanisms to ensure AI systems are not misused or discriminatory.

3. Periodic reviews to refine the framework as AI technologies evolve.

By addressing these issues, the act could achieve its intended goals while mitigating its potential drawbacks.

Artificial intelligence is mostly a scam that will cause many times more problems than it solves. Its primary use will be to defraud billions of people. In fact, it has already been used to deny medical treatments, medications and medical insurance reimbursement. Medical insurance companies hate paying for their "members'" medical care and medications. Now they can blame AI for denials of treatment and denials of reimbursement.

It's a cheap way to make a ton of money!

AI is not a bullet; it is the aggregation of past and present data and the inferences made from that data. When it comes to deepfakes, I agree that is an issue, but therein lies the point: people must be educated on what AI is, rather than making blind and often unintelligible comments about what it means and what it can actually do. AI is a huge benefit, but just like anything else, in the wrong hands it is a weapon. Think of a hammer: in one hand, it can be used to build a house; in another, it can be used to bludgeon someone to death. AI is the same. I apologize for using somewhat coarse language, but the facts are the facts: "Guns don't kill people; untrained and uneducated people do."

AI is needed in the Supreme Court, since the men on the Supreme Court lack intellect and a spine.

I asked copilot to write a sonnet about an apple’s unrequited love for a silver spoon.

Its response was fairly good. Be afraid of what this can do for propagandists and those aiming to sow division in the world’s democracies.

Upon the orchard's bough, there hangs an apple, red and round,

Whose heart beats for a spoon of silver, silent and profound.

Though nestled close in drawer's embrace, they're worlds apart,

For one is fruit of nature's womb, the other, crafted art.

The apple watches daily as the spoon with others lies,

Reflecting light and laughter, under bright and cheery skies.

Yet never does the spoon glance back, nor knows the apple's sighs,

Unaware of longing from the tree where it resides.

In dreams, they dance, a waltz of whimsy, sweet and fair,

The apple's blush meets silver gleam in cool, nocturnal air.

But dawn does break, and with it, so does fantasy's thin veil,

The apple's love, unrequited, in the daylight turns to pale.

Yet still it holds, this tender fruit, a hope within its core,

That spoon might fall, by fate's kind hand, and love it evermore.

But till that day, it loves in vain, with heart both bruised and sore,

An apple's love for silver spoon, a legend, nothing more.

https://www.bbc.com/news/articles/cnd607ekl99o

99% sheep 1% Oligarchs

a few greedy scumbags

We have been heading in this direction for decades, albeit in slow cycles, but now with rapid transformation.

So why are we allowing it to happen?

How long are free handouts for the masses going to last? Pretty soon there will be no consumers left. Who will sell what to whom? Back to the farmstead? Oops, Bill Gates and his ilk bought up all the farmland?

First major attempts to regulate AI face headwinds from all sides

https://apnews.com/article/58eab95d279648e759f9099ca4249f00

DENVER (AP) — Artificial intelligence is helping decide which Americans get the job interview, the apartment, even medical care, but the first major proposals to rein in bias in AI decision making are facing headwinds from every direction.

Lawmakers working on these bills, in states including Colorado, Connecticut and Texas, came together Thursday to argue the case for their proposals as civil rights-oriented groups and the industry play tug-of-war with core components of the legislation.

“Every bill we run is going to end the world as we know it. That’s a common thread you hear when you run policies,” Colorado’s Democratic Senate Majority Leader Robert Rodriguez said Thursday. “We’re here with a policy that’s not been done anywhere to the extent that we’ve done it, and it’s a glass ceiling we’re breaking trying to do good policy.”

Organizations including labor unions and consumer advocacy groups are pulling for more transparency from companies and greater legal recourse for citizens to sue over AI discrimination. The industry is offering tentative support but digging in its heels over those accountability measures.

More:

NYTimes: The Worst Part of a Wall Street Career May Be Coming to an End

April 10, 2024, updated 11:52 a.m. ET

https://www.nytimes.com/2024/04/10/business/investment-banking-jobs-artificial-intelligence.html

<Quote>

Artificial intelligence tools can replace much of Wall Street’s entry-level white-collar work, raising tough questions about the future of finance.

Pulling all-nighters to assemble PowerPoint presentations. Punching numbers into Excel spreadsheets. Finessing the language on esoteric financial documents that may never be read by another soul.

Such grunt work has long been a rite of passage in investment banking, an industry at the top of the corporate pyramid that lures thousands of young people every year with the promise of prestige and pay.

Until now. Generative artificial intelligence — the technology upending many industries with its ability to produce and crunch new data — has landed on Wall Street. And investment banks, long inured to cultural change, are rapidly turning into Exhibit A on how the new technology could not only supplement but supplant entire ranks of workers.

The jobs most immediately at risk are those performed by analysts at the bottom rung of the investment banking business, who put in endless hours to learn the building blocks of corporate finance, including the intricacies of mergers, public offerings and bond deals. Now, A.I. can do much of that work speedily and with considerably less whining.

<End Quote>

More:

Essentially, given our political system’s numerous flaws, we should not be overly optimistic as to what S. 3205 can accomplish if it is passed and signed into law.

• The bill requires federal agencies to adopt the Artificial Intelligence Risk Management Framework (AI RMF) developed by the National Institute of Standards and Technology (NIST). The implementation could be challenging given the complexity of AI technologies and the varying capabilities of different agencies.

• While the bill does emphasize cybersecurity in AI systems, ensuring the security of these systems could be a significant challenge given the evolving nature of cyber threats.

• Critics have a legitimate argument in saying that the bill could lead to regulatory overreach, potentially stifling innovation in AI.

• The effectiveness of the NIST guidelines in managing AI risks could be a concern. If the guidelines are not comprehensive or fail to keep up with the rapid advancements in AI, they might not fully address the risks.

• The bill mandates regular reporting, studies, and the development of standards, which could be resource-intensive for agencies and therefore subject to the whims of congressional funding.

For the full text of S. 3205, visit https://www.congress.gov/118/bills/s3205/BILLS-118s3205is.pdf

To see the registered lobbyists, see "S.3205 Lobbyists" on OpenSecrets:

https://www.opensecrets.org/federal-lobbying/bills/lobbyists?cycle=2023&id=s3205-118

(What do they hope to gain?)

The Key Provisions of S. 3205

S. 3205, the Federal Artificial Intelligence Risk Management Act of 2023, has several key provisions:

• Artificial Intelligence Risk Management Framework (AI RMF): The bill requires federal agencies to use the AI RMF developed by the National Institute of Standards and Technology (NIST).

• NIST Guidelines: NIST is required to issue guidelines for agencies to incorporate the framework into their AI risk management efforts. These guidelines should provide standards, practices, and tools consistent with the framework, and explain how agencies can leverage the framework to reduce risks to people and the planet in the development, procurement, and use of AI.

• Cybersecurity Strategies: The guidelines should specify appropriate cybersecurity strategies and the installation of effective cybersecurity tools to improve the security of AI systems.

• Office of Management and Budget (OMB) Guidance: The OMB must issue guidance requiring federal agencies to incorporate the framework and the guidelines into their AI risk management efforts.

• Government Accountability Office (GAO) Study: The GAO shall study the impact of the application of the framework on agency use of AI.

• Agency Reporting: The OMB must periodically report to Congress on agency implementation and conformity to the framework.

• AI Expertise Initiative: The OMB must establish an initiative to provide expertise on AI pursuant to requests by agencies.

• NIST Studies and Standards Development: NIST shall complete a study to review the existing and forthcoming voluntary consensus standards for the testing, evaluation, verification, and validation of AI acquisitions.

A good first step. Though it doesn't go as far as the EU legislation, it does implement current industry standards used by U.S. and international companies, the U.S. federal government, foreign governments, and several U.S. states.

Bipartisan legislation that has a better-than-average chance of making it out of committee and being enacted. It implements the National Institute of Standards and Technology (NIST) Artificial Intelligence Risk Management Framework (AI RMF, 2023), which follows the approach of NIST's Cybersecurity Framework, a framework already used by most large U.S. companies as well as many large foreign companies, the federal government, 20 U.S. states, several foreign governments (Canada, Italy, Switzerland, UK) and international organizations (OAS).

While the framework's flexibility has made it an industry standard, it doesn't go as far as the EU legislation, which focuses on regulating the 5% to 15% of AI systems deemed high-risk because of their impact on human rights or safety.

The EU framework is contained in the Artificial Intelligence Act, proposed by the European Commission in 2021 (the Council of the EU adopted its position in 2022). It aims to foster AI products that can be trusted by classifying AI systems by risk (high-risk products covered by safety legislation, high-risk human services, and systems that interact with humans) and mandating various development and use requirements in order to put in place appropriate safeguards, monitoring, and oversight.

“The cybersecurity framework has been applied by a large majority of U.S. companies and seen notable adoption outside the U.S., including by the Bank of England, Nippon Telephone & Telegraph, Siemens, Saudi Aramco, and Ernst & Young. The federal government mandates its use by federal agencies, and 20 states have done likewise. Various federal agencies (most notably the Securities and Exchange Commission) use the cybersecurity framework as a benchmark for sound cybersecurity practices in regulated industries.”

“While the flexible approach does not ensure adoption, it avoids some of the challenges the much more ambitious EU AI Act faces in the EU’s legislative process”

“As the Commission set out to frame legislation and gathered input, its focus narrowed toward specific use cases, leading to its “risk-based” proposal to regulate AI systems deemed “high-risk” because of their impact on rights of individuals or on safety. While this is sometimes described as “horizontal,” it is less so than NIST’s AI RMF or the AIBOR. Indeed, the Commission estimated in its proposal that only 5-15 percent of AI systems would be subject to the regulation.”

https://www.causes.com/comments/95791

https://www.brookings.edu/research/the-eu-ai-act-will-have-global-impact-but-a-limited-brussels-effect/

https://www.politico.eu/article/eu-plan-regulate-chatgpt-openai-artificial-intelligence-act/

https://digital-strategy.ec.europa.eu/en/policies/european-approach-artificial-intelligence

https://www.weforum.org/agenda/2023/03/the-european-union-s-ai-act-explained/

https://hbr.org/2021/09/ai-regulation-is-coming

https://www.brookings.edu/articles/nists-ai-risk-management-framework-plants-a-flag-in-the-ai-debate/

https://www.govtrack.us/congress/bills/118/s3205

It sounds like a good idea, however I'd want to see the fine print to make a final determination.

Trump proves that artificial intelligence doesn't work!

We need regulation and oversight of AI now, before it gets too far ahead of regulators as social media has done.

I'm sure this bill isn't perfect, and I'm sure we can do more, but Congress is failing us all currently by doing nothing.

Please support this bill and see that it's passed while you continue finding other ways to protect American workers, thinkers, and artists from AI.

The absolute WORST one to be allowed control of AI is government, especially when it is in bed with the megacorporations, where the same people are running both and are in the business of war, as ours is. Can you name any time when government involvement made anything better? Over and over they lie to us, again and again and again. How stupid can we be to entrust them with our best interests? AI should be made open source, where no one owns it or can hide what they do with it, because it can be seen by ALL, all the time. Give it to government and it'll be made a weapon. Bet on it!